Coding Essentials Guidebook for Developers: How Programming Languages Work

This post is a free extract from Chapter 2 of the Coding Essentials Guidebook for Developers. If you get value out of it, support us by purchasing it here. Subscribe with your email address if you'd like to stay up to date with new content from Initial Commit.

How Programming Languages Work

Now that we have a basic understanding of what data is and how computers store it, let's dig into what we mean by programming and coding. We use code to tell computers to do things. Each line of code we write provides an instruction or set of instructions for the computer to execute. You might think the real way we tell computers to do things is via user input - using mice, keyboards, microphones, and touchscreens. On the surface, these input devices are the highest-level tools we use to interact with our computers. But as we discussed in the previous chapter, the computer's brain - the CPU - can only understand instructions in its base instruction set. Therefore, every action we issue to a computer must ultimately be converted into instructions from the CPU's binary instruction set.

This reveals the most rudimentary way to write code - stringing together a sequence of binary instructions from the CPU's instruction set and sending them directly to the CPU to be executed. However, most humans are not used to thinking in ones and zeros, which makes a binary instruction set too cryptic and cumbersome for most people to use. Additionally, the base instructions are so granular that our programs would be very long, difficult to understand, and difficult to troubleshoot. But it is possible to code this way, and doing so is called writing machine code. In fact, this is the lowest level we can code at, since instructions can't be broken down or converted any further than the CPU's base instruction set. Fundamentally, this defines what coding is - using programmatic instructions to make the CPU complete useful tasks.
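To make the idea of machine code concrete, here is a minimal sketch of a pretend CPU written in Python. The instruction set is entirely hypothetical and far simpler than any real CPU's: each instruction is 8 bits, the high 4 bits select an operation (`LOAD`, `ADD`, `SUB`, or `HALT`), and the low 4 bits hold a small number to operate on. The `run` function plays the role of the CPU, decoding and executing each binary instruction in turn.

```python
# Hypothetical 8-bit instruction format invented for this example:
# high 4 bits = opcode, low 4 bits = an immediate operand (0-15).
HALT, LOAD, ADD, SUB = 0b0000, 0b0001, 0b0010, 0b0011


def run(machine_code: list[str]) -> int:
    """Execute a list of 8-bit binary instruction strings; return the accumulator."""
    acc = 0
    for word in machine_code:
        instruction = int(word, 2)       # e.g. "00010101" -> 21
        opcode = instruction >> 4        # keep the high 4 bits
        operand = instruction & 0b1111   # keep the low 4 bits
        if opcode == LOAD:
            acc = operand
        elif opcode == ADD:
            acc += operand
        elif opcode == SUB:
            acc -= operand
        elif opcode == HALT:
            break
        else:
            raise ValueError(f"unknown opcode {opcode:04b}")
    return acc


program = [
    "00010101",  # LOAD 5
    "00100011",  # ADD 3
    "00110001",  # SUB 1
    "00000000",  # HALT
]
print(run(program))  # 7
```

Even this four-instruction program is hard to follow without the comments, which is exactly why few people write machine code by hand.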

Luckily, we never have to learn or use binary machine code directly. That is where programming languages come in. Programming languages like JavaScript, Python, Java, and many others offer human-readable instructions that we can use to write code. Similar to the CPU's base instruction set, each programming language offers a set of instructions that we can use to tell the computer what we want it to do. The difference is that in a programming language, each instruction is a human-readable keyword instead of an obscure binary string of ones and zeros. Ultimately, each line of code we write in one of these languages gets converted into machine code instructions that the CPU can understand. The key is that we as programmers never have to see the machine code or even know it exists. This is called abstraction. All we need to do is learn a set of relatively simple keywords to tell a computer what to do. The low-level operations of the CPU are abstracted away. Programming languages provide a bridge between our brains and the CPU.
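The simplest form of this translation is an assembler, which maps readable mnemonics one-to-one onto binary instructions. The sketch below assumes a hypothetical 8-bit instruction set (a 4-bit opcode followed by a 4-bit operand); the mnemonics and opcode values are invented for illustration. Languages like Python or JavaScript go much further, since a single line of code can become many machine instructions, but the core idea of turning readable text into binary is the same.

```python
# Hypothetical opcodes for an invented 8-bit instruction set.
OPCODES = {"HALT": 0b0000, "LOAD": 0b0001, "ADD": 0b0010, "SUB": 0b0011}


def assemble(source: str) -> list[str]:
    """Translate lines like 'ADD 3' into 8-bit binary instruction strings."""
    machine_code = []
    for line in source.strip().splitlines():
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        mnemonic = parts[0].upper()
        operand = int(parts[1]) if len(parts) > 1 else 0
        if not 0 <= operand <= 15:
            raise ValueError(f"operand must fit in 4 bits: {line!r}")
        word = (OPCODES[mnemonic] << 4) | operand  # opcode in high bits, operand in low bits
        machine_code.append(f"{word:08b}")         # format as 8 binary digits
    return machine_code


print(assemble("""
LOAD 5
ADD 3
SUB 1
HALT
"""))
# ['00010101', '00100011', '00110001', '00000000']
```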

Compilers and Interpreters

As previously mentioned, each programming language is defined by rules for converting a set of human-readable keywords into machine code that the CPU can understand. But how is this conversion achieved? The answer is compilers and interpreters.

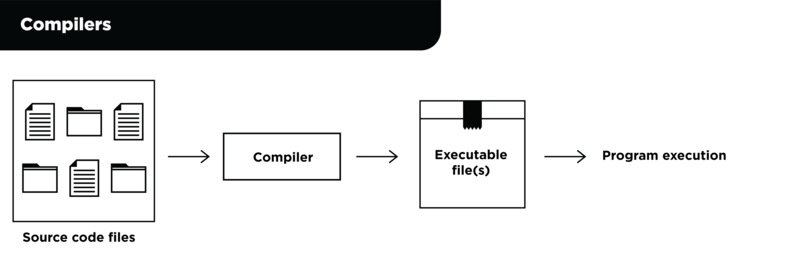

A compiler is a program that takes source code written in a specific programming language and converts it into a form that the computer (or another program) knows how to execute. This "form" could be machine code ready to be executed on the CPU or an intermediate form called bytecode. Bytecode is similar to machine code, but it is intended to be executed by a software program instead of directly by hardware like the CPU. Usually, the output from the compiler is saved in one or more files called compiled executables. Executables can be packaged for sale and distribution in standard formats that make it easy for users to download, install, and run the program. An important characteristic of the compiling process is that the source code is compiled before the program is executed by the end user. In other words, code compilation typically takes place separately from program execution.
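Python happens to expose both halves of this process, which makes it handy for a small demonstration. The built-in `compile()` function converts source code into a code object containing Python bytecode, and the standard-library `marshal` module serializes that object to bytes that could be written to a file. Execution then happens later, as a separate step. Note that this produces bytecode for the Python interpreter rather than CPU machine code, and that `marshal`'s format is tied to the Python version that created it. Treat this as a sketch of the idea, not a way to distribute programs.

```python
import marshal

source = '''
def greet(name):
    return f"Hello, {name}!"

result = greet("compiler")
'''

# Step 1 (ahead of time): compile the source into a bytecode code object
# and serialize it, as a build step might write it to a file.
code_object = compile(source, "<greet>", "exec")
bytecode_bytes = marshal.dumps(code_object)

# Step 2 (later, separately): load the bytecode and execute it.
# The original source text is no longer needed at this point.
loaded = marshal.loads(bytecode_bytes)
namespace = {}
exec(loaded, namespace)
print(namespace["result"])  # Hello, compiler!
```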

The figure below illustrates how a traditional compiler works:

Figure 2.1: Compiler

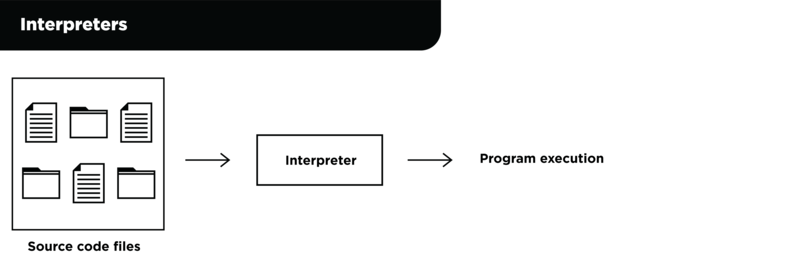

An interpreter is a program that takes source code written in a specific programming language, converts it into a form the computer can understand, and immediately executes it. The main difference between compiling and interpreting is that interpreting has no gap between code conversion and execution - both of these steps happen at program run time. With compiling, by contrast, the code conversion takes place in advance (sometimes far in advance) of program execution.
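A toy interpreter shows the defining property: each statement is translated and executed as soon as it is read. The three-command language below (`set`, `add`, `print`) and the `interpret` function are invented for this example. Because there is no separate conversion step, an error on a later line is only discovered after the earlier lines have already run.

```python
def interpret(lines):
    """Translate and execute a toy program one statement at a time."""
    variables = {}
    printed = []  # collect printed values so callers can inspect them
    for line_number, line in enumerate(lines, start=1):
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        command, *args = parts
        if command == "set":        # set NAME VALUE
            variables[args[0]] = int(args[1])
        elif command == "add":      # add NAME VALUE
            variables[args[0]] += int(args[1])
        elif command == "print":    # print NAME
            value = variables[args[0]]
            print(value)            # output appears immediately
            printed.append(value)
        else:
            raise ValueError(f"line {line_number}: unknown command {command!r}")
    return printed


program = ["set x 5", "print x", "oops x", "print x"]
try:
    interpret(program)
except ValueError as err:
    print("stopped:", err)
```

Here the first `print x` outputs `5` before the interpreter reaches the invalid `oops` line on line 3 and stops. A compiler would typically reject the whole program before any of it ran.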

The figure below illustrates how a traditional interpreter works:

Figure 2.2: Interpreter

Although many programming languages use a combination of compiling and interpreting, most are based around one or the other. Programming languages based more heavily around compiling are called compiled languages, and those based more heavily around interpreting are called interpreted languages. As we'll see later in this book, JavaScript and Python are examples of interpreted languages, and Java is an example of a compiled language.
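Python illustrates the mixed approach. The standard CPython implementation first compiles source code to bytecode and then interprets that bytecode. The standard-library `dis` module prints the bytecode for a function. The exact instructions vary between Python versions, so no output is reproduced here.

```python
import dis


def add(a, b):
    """A tiny function whose compiled bytecode we want to inspect."""
    return a + b


# CPython compiled this function's source to bytecode before running it.
# dis.dis prints those bytecode instructions, which the interpreter then
# executes each time add() is called.
dis.dis(add)
print(add(2, 3))
```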

The Trade-off: Speed vs Effort and the Level of the Language

Programming languages can be categorized in many ways, but one important characteristic to describe is the level of the language. Specifically, programming languages can be classified as either lower-level or higher-level languages. Lower-level languages require few or no steps to convert the code into machine code that can be executed by the CPU. Programming languages like assembly language and C are considered lower-level languages since there are very few steps to convert the code into machine code. Higher-level languages typically require more steps to convert source code into a form that the computer hardware can execute. Programming languages like JavaScript and Python are considered to be higher-level languages.

Lower-level languages typically execute faster than higher-level languages since they require fewer steps to convert source code to machine code. But there is a trade-off: lower-level languages are typically harder and more time-consuming for programmers to use. In general, they offer a smaller set of keywords to code with, less protection against common coding issues, and require the coder to have a deeper understanding of how the computer hardware works. Higher-level languages, like Python and JavaScript, offer a large set of intuitive keywords to code with, robust error protection, and require little to no understanding of how the hardware works. The trade-off is that programs written in these languages will usually run slower than their lower-level counterparts since there are more steps required to convert the code into machine code that the CPU can execute.
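The speed side of this trade-off is visible even within a single language. In CPython, the built-in `sum()` runs its loop in compiled C code, while a hand-written `for` loop is executed by the interpreter one bytecode instruction at a time. The sketch below times both approaches. The function names are made up for the example, and the actual numbers depend on your machine and Python version, though the built-in version is typically noticeably faster.

```python
import timeit

N = 1_000_000


def sum_with_loop(n):
    """Add 0..n-1 step by step; each iteration runs several interpreted bytecode instructions."""
    total = 0
    for i in range(n):
        total += i
    return total


def sum_with_builtin(n):
    """Delegate the whole loop to sum(), which CPython implements in C."""
    return sum(range(n))


# Both approaches must agree before timing them is meaningful.
assert sum_with_loop(N) == sum_with_builtin(N)

loop_time = timeit.timeit(lambda: sum_with_loop(N), number=5)
builtin_time = timeit.timeit(lambda: sum_with_builtin(N), number=5)
print(f"interpreted loop: {loop_time:.3f}s, built-in sum: {builtin_time:.3f}s")
```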

To continue reading, purchase the Coding Essentials Guidebook for Developers here.
