The Evolution of Version Control System (VCS) Internals - Part 2


Table of Contents

- Introduction

- Perforce Helix Core - Second Generation

- Background

- Sample Perforce Helix Core History File

- BitKeeper - Third Generation

- Architecture

- Darcs - Darcs Advanced Revision Control System - Third Generation

- Basic Commands

- Monotone - Third Generation

- Background

- Bazaar - Third Generation

- Fossil - Third Generation

- Summary

Introduction

This article is the second in our series comparing the technical details of the most historically significant Version Control Systems (or VCS). If you haven't yet, we recommend checking out the first article in the series.

In this second article, we will discuss six additional VCS:

- Perforce Helix Core

- BitKeeper

- Darcs

- Monotone

- Bazaar

- Fossil

Perforce Helix Core - Second Generation

Background

Perforce Helix Core is a proprietary VCS created, owned, and maintained by Perforce Software, Inc. It is typically set up using a centralized model, although it does offer a distributed model option. It is written in C and C++ and was initially released in 1995. It is primarily used by large companies that track and store a lot of content in large binary files, as is the case in the video game development industry. Although Helix Core is typically cost-prohibitive for smaller projects, Perforce offers a free version for teams of up to 5 developers.

Architecture

Perforce Helix Core is set up as a server/client model. The server is a process called p4d, which waits and listens for incoming client connections on a designated port, typically port 1666. The client is a process called p4, which comes in both command-line and GUI flavors. Users run the p4 client to connect to the server and issue commands to it. APIs are available for various programming languages, including Python and Java, which allows Helix Core commands to be issued and their results processed automatically from scripts. Integrations are also available for IDEs like Eclipse and Visual Studio, allowing users to work with version control from within those tools.

The Helix Core Server manages repositories referred to as depots, which store files in directory trees, not unlike Subversion (SVN). Clients can check out sets of files and directories from the depots into local copies called workspaces. The atomic unit used to group and track changes in Helix Core depots is called the changelist. Changelists are similar to Git commits. Helix Core implements two similar forms of branching: branches and streams. Branches are conceptually similar to what we are used to, namely separate lines of development history. A stream is a branch with added functionality that Helix Core uses to provide recommendations on best merging practices throughout the development process.

When a file is added for tracking, Helix Core classifies it using a file type label. The two most commonly used file types are text and binary. For binary files, the entire file content is stored for each new revision. This is a common VCS tactic for dealing with binary files, which do not produce meaningful line-based deltas and are not amenable to the normal merge process, since manual conflict resolution is usually not possible.

For text files, only the deltas (changes between revisions) are stored. Text file history and deltas are stored using the RCS (Revision Control System) format, which tracks each file in a corresponding ,v file in the server depot. This is similar to CVS, which also leverages RCS file formats for preserving revision history. Files are often compressed using gzip when added to the depot and decompressed when synced back to the workspace.
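To make the delta idea concrete, here is a minimal Python sketch of applying an RCS-style delta. RCS keeps the newest revision in full and stores each older revision as an ed-like script of dL N (delete N lines starting at line L) and aL N (append the N lines that follow after line L) commands, where L refers to the text the delta is applied to. The sketch assumes the delta text has already been extracted from the ,v file with its @-escaping undone; the function name apply_rcs_delta is hypothetical and not part of RCS or Helix Core.

```python
def apply_rcs_delta(lines, delta):
    """Apply an RCS-style ed script to `lines` and return the resulting lines.

    Commands (one per line, sorted by line number):
      dL N  delete N lines starting at line L (1-based)
      aL N  append the N lines that follow the command after line L
    L always refers to the input text, so the input is walked exactly once.
    """
    script = delta.splitlines()
    out = []
    pos = 0  # number of input lines consumed so far
    i = 0
    while i < len(script):
        command = script[i]
        op = command[0]
        start, count = (int(x) for x in command[1:].split())
        i += 1
        if op == "d":
            out.extend(lines[pos:start - 1])  # keep lines before the deletion
            pos = start - 1 + count           # skip the deleted lines
        elif op == "a":
            out.extend(lines[pos:start])      # keep lines up to and including L
            pos = start
            out.extend(script[i:i + count])   # insert the appended text
            i += count
        else:
            raise ValueError(f"unknown RCS delta command: {command!r}")
    out.extend(lines[pos:])                   # keep everything after the last command
    return out


head = ["cheese.", "more cheese."]    # revision 1.3 text from the sample history file
print(apply_rcs_delta(head, "d2 1"))  # ['cheese.']
```

Applied to the sample history file later in this section, the stored delta d2 1 turns the full text of revision 1.3 back into revision 1.2. Walking older revisions this way is why the newest revision, the one most often requested, is the cheapest to retrieve.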

Note - I was surprised that a longstanding proprietary product like Perforce Helix Core would base such an essential component, its delta and history file backend, on a seemingly antiquated open-source tool like RCS. After thinking about it, it may not be that surprising: a file format can be adopted without reusing any of the original code, and many open-source licenses permit commercial use. This is a testament to the power of some of the first generation VCS like RCS and also highlights their ongoing practical importance.

Basic Commands

Below is a list of the most common Helix Core commands.

p4d -r ./server/ -p localhost:1666: Start the Helix Core server process locally on port 1666, storing server data in the ./server/ directory.

p4 depot -t stream <depot-name>: Use the Helix Core client to create a depot of type stream.

p4 stream -t mainline //<depot-name>/<stream-name>: Create a mainline stream in the specified depot.

p4 streams: List all streams.

p4 client -S //<depot-name>/<stream-name>: Create a local client workspace and bind it to a stream.

p4 clients -S //<depot-name>/<stream-name>: List all workspaces bound to a stream.

p4 add <filename.ext>: Add a new file for tracking (technically adding it to a changelist), which can then be submitted to the depot.

p4 edit <filename.ext>: Check out an existing tracked file for editing.

p4 delete <filename.ext>: Delete an existing tracked file.

p4 submit: Submit the active changelist to the Helix Server depot for storage.

p4 sync: Sync the workspace with the corresponding stream in the depot.

For more information on Perforce Helix Core, see the Helix Core User Guide.

Sample Perforce Helix Core History File

head 1.3;
access ;
symbols ;
locks ;comment @@;

1.3
date 2020.02.22.10.49.48; author p4; state Exp;
branches ;
next 1.2;

1.2
date 2020.02.22.10.48.23; author p4; state Exp;
branches ;
next ;

desc
@@

1.3
log
@@
text
@cheese.
more cheese.
@

1.2
log
@@
text
@d2 1
@

BitKeeper - Third Generation

Background

BitKeeper is a distributed VCS created and maintained by BitMover, Inc. It is written primarily in C along with some Tcl. It was initially released in 2000 and was proprietary software until BitMover open-sourced it in 2016. Linus Torvalds, the creator of Linux and Git, used BitKeeper for Linux kernel development in the early 2000s due to its distributed model and his distaste for centralized systems like CVS. Primarily for licensing reasons, the Linux development community switched to Git after Linus Torvalds created it in 2005.

Architecture

BitKeeper provides a peer-to-peer distributed model for version control that leverages SCCS (Source Code Control System) in the backend. This is another interesting case of a more modern third generation VCS leveraging a first generation VCS for some core functionality. SCCS tracks revisions to each file in a corresponding history file called an s-file, so named because the filename is prefixed with s. (for example, s.foo.c). BitKeeper slightly modifies this convention by instead appending ,s to the filename. SCCS stores deltas using a method called interleaved deltas, also known as a weave. For more information on SCCS, see the SCCS section in the first article in this series.
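To make interleaved deltas concrete, here is a minimal Python sketch that extracts one revision from a weave. It uses the weave body of the sample BitKeeper history file (from the BitKeeper source notes) shown at the end of this section, with ^A written literally as in that listing. The function weave_extract and its marker parameter are hypothetical names. The sketch assumes a linear history, so a revision includes every delta serial up to its own, and it treats ^AI n, ^AD n and ^AE n as opening an insert block, opening a delete block, and closing serial n's open block. Blocks can overlap rather than nest, so open blocks are tracked by serial.

```python
def weave_extract(weave, included, marker="\x01"):
    """Return the lines of the revision whose delta serials are in `included`.

    A text line is visible when every open insert block belongs to an
    included serial and no open delete block does.
    """
    open_blocks = {}  # serial -> "I" (insert) or "D" (delete)
    out = []
    for line in weave:
        if line.startswith(marker):
            kind, serial = line[len(marker):].split()
            if kind in ("I", "D"):
                open_blocks[int(serial)] = kind
            elif kind == "E":
                open_blocks.pop(int(serial), None)
            continue
        inserted = all(s in included for s, k in open_blocks.items() if k == "I")
        deleted = any(s in included for s, k in open_blocks.items() if k == "D")
        if inserted and not deleted:
            out.append(line)
    return out


# Weave body of the sample BitKeeper history file, with ^A kept literal.
WEAVE = [
    "^AI 4", "start change 3 with new line", "^AE 4",
    "^AI 2", "one",
    "^AI 3", "^AD 4", "first new line", "^AE 3",
    "two", "^AE 4",
    "^AD 3", "three", "^AE 3",
    "^AI 3", "replace third line", "^AE 3",
    "^AE 2", "^AI 1", "^AE 1",
]

for serial, rev in [(2, "1.1"), (3, "1.2"), (4, "1.3")]:
    print(rev, weave_extract(WEAVE, set(range(1, serial + 1)), marker="^A"))
```

Because every revision lives in one pass over the same file, retrieving any revision costs the same, unlike RCS-style reverse deltas where older revisions need more work.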

However, BitKeeper is not simply a wrapper for SCCS. It greatly extends the functionality by adding layers for tracking commits and enabling a distributed model. One limiting factor of SCCS is that changes to multiple files cannot be grouped together into a single commit. Revisions for each file are tracked independently in their own s-file. A new file revision is stored in a file's corresponding s-file each time a change is checked in. BitKeeper preserves this behavior, but after file revisions are checked in, it allows them to be committed as a group. In BitKeeper terminology, a commit is referred to as a changeset, and changesets are also stored as s-files.
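The distinction between checking in and committing can be illustrated with a toy Python model. Everything in it (the ToyRepo class, its delta, pending and commit methods, and the in-memory layout) is hypothetical: it only mirrors the behavior described above and the bk delta, bk pending and bk commit commands listed below, and it is not BitKeeper's implementation or file format.

```python
from dataclasses import dataclass, field


@dataclass
class ToyRepo:
    # path -> revision numbers checked in so far, oldest first (one "s-file" each)
    file_revs: dict = field(default_factory=dict)
    # each changeset: (comment, {path: newest revision it includes})
    changesets: list = field(default_factory=list)

    def delta(self, path):
        """Check in a new revision of one file; it is not committed yet."""
        revs = self.file_revs.setdefault(path, [])
        revs.append(f"1.{len(revs) + 1}")
        return revs[-1]

    def pending(self):
        """Files whose newest checked-in revision is not in any changeset."""
        committed = {}
        for _comment, members in self.changesets:
            committed.update(members)
        return {path: revs[-1] for path, revs in self.file_revs.items()
                if committed.get(path) != revs[-1]}

    def commit(self, comment):
        """Group every pending file revision into one new changeset."""
        members = self.pending()
        if not members:
            raise ValueError("nothing to commit")
        self.changesets.append((comment, members))
        return members


repo = ToyRepo()
repo.delta("foo.c")
repo.delta("bar.c")
print(repo.pending())          # both files are checked in but uncommitted
repo.commit("first changeset")
```

Only the changesets list would be exchanged by a push or pull in this model, matching the point below that committed changesets, not merely checked-in changes, are what get transferred.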

When a new BitKeeper repository is initialized in an empty directory, the following subdirectory tree structure is created:

BitKeeper/
    deleted/
    etc/
        config
        gone
        ignore
    log/
        NFILES
        ROOTKEY
        TIP
        cmd_log
        features
        repo_id
        scandirs
        x.id_cache
    readers/
    tmp/
.bk/
    BitKeeper/
        ...
    SCCS/
        ChangeSet,1
        ChangeSet,2
        ChangeSet,s

We will highlight a few of the important items in this structure. The BitKeeper/etc/config file contains general repository configuration information and settings, including a description of the repository and the email address of the local developer. The BitKeeper/log/TIP file contains a reference to the most recent changeset in the repository. The BitKeeper/log/cmd_log file contains a history log of all BitKeeper commands executed on the repository.

The hidden .bk/SCCS/ directory is where all of the s-files and changeset files created by BitKeeper are stored, so this folder is essentially the repository itself. As new files are added, modified, and checked in, corresponding s-files are created and updated in this folder. When changes are committed with descriptive comments, they are stored in a set of ChangeSet history files, which also use the s-file format.

Being a third generation VCS, no BitKeeper repository needs to be set up as a central repository, although choosing a centralized development workflow is totally fine. BitKeeper allows cloning, pushing, and pulling of repositories over networks and the Internet. Only committed changesets - as opposed to checked-in changes - are transferred via these methods.

One difference between BitKeeper and Git is that Git encourages lightweight branching and can support many branches as part of a single repository. BitKeeper, on the other hand, typically requires a separate clone of the repository to represent a new line of development. Pushing, pulling, and merging can take place between these workspaces as expected. Repositories can be designated as parent or child repositories: a child's parent serves as the default target for pushes and the default source for pulls and merges, avoiding the need to specify these details each time those commands are run.

BitKeeper can be installed on macOS via the Homebrew package manager. Download links for several platforms seem to be broken on the BitKeeper downloads page, but some do appear to be available using this link to the file structure for downloads. Note that I have not tested these myself. Once installed, BitKeeper can be run from the command line using the bk <subcommand> command.

Basic Commands

Below is a list of the most common BitKeeper commands (note that many of these commands have a corresponding GUI version, which opens a visual wizard to help):

bk init: Initialize a directory as a BitKeeper repository.

bk new <filename.ext>: Check-in a new file for revision control.

bk get <filename.ext>: Check out a file from the corresponding history file in read-only mode.

bk edit <filename.ext>: Check out a file from the corresponding history file for editing.

bk delta: Check-in working directory changes (note at this point the changes have not been committed).

bk commit: Commit outstanding revisions (only those that have not been committed yet) as a new changeset.

bk pending: List changes that will be included in the next commit.

bk clean: Remove working directory files that haven't changed since the last revision.

bk log: Display the log of file revisions and changesets in the repository.

bk cmdlog: Display the log of commands executed against the repository.

bk ignore: Tell BitKeeper to ignore a particular file pattern.

bk push: Transfer changesets from the local repository to its parent repository.

bk pull: Transfer changesets from the parent repository to the local repository. Merges will be done automatically if possible.

bk merge <local> <ancestor> <remote> <merge>: Perform a three-way merge of the local and remote versions of a file against their common ancestor, writing the result to <merge>.

bk diff: Display changes between working directory and existing revisions in the repository.

For more information on BitKeeper internals, see the BitKeeper manual and the BitKeeper source notes.

Sample BitKeeper History File (obtained from the BitKeeper source notes)

^AH41861
^As 1/2/2
^Ad D 1.3 09/12/26 11:21:35 rick 4 3    <-- rev 1.3, serial 4, parent 3
^Ac third change
^AcK04692
^Ae
^As 2/1/2
^Ad D 1.2 09/12/26 11:20:59 rick 3 2    <-- rev 1.2, serial 3, parent 2
^Ac first-change
^AcK03837
^Ae
^As 3/0/0
^Ad D 1.1 09/12/26 11:19:41 rick 2 1    <-- rev 1.1, serial 2, parent 1
^Ac BitKeeper file /home/bk/rick/fastpatch-weave-D/src/foo
^AcF1
^AcK01234
^AcO-rw-rw-r--
^Ae
^As 0/0/0
^Ad D 1.0 09/12/26 11:19:41 rick 1 0    <-- root 1.0, serial 1, no parent
^AcBlm@lm.bitmover.com|ChangeSet|19990319224848|02682
^AcHwork.bitmover.com
^AcK14085
^AcPsrc/foo
^AcR16a32aed49f14fe1
^AcV4
^AcX0xb1
^AcZ-08:00
^Ae
^Au
^AU
^Af e 0
^Af x 0xb1
^At
^AT    <-- The end of title block (^At-^AT) marks beginning of weave
^AI 4
start change 3 with new line
^AE 4
^AI 2
one
^AI 3
^AD 4
first new line
^AE 3
two
^AE 4
^AD 3
three
^AE 3
^AI 3
replace third line
^AE 3
^AE 2
^AI 1
^AE 1

Darcs - Darcs Advanced Revision Control System - Third Generation

Background

Darcs is a third generation VCS that was released by David Roundy in 2003 with the goal of creating an easy to use, free and open source distributed VCS. One unique aspect of Darcs is that it is written in Haskell, a purely functional programming language. If you are curious about Haskell and have only used imperative languages like C, Java, and Python, check out this tutorial.

Architecture

Unlike Perforce and BitKeeper, Darcs does not leverage a first generation VCS to implement backend functionality. However, Darcs preserves the notion of the working directory, where developers can make changes to a checked-out copy of the code. Darcs takes a somewhat unique philosophy when it comes to thinking about revisions. A contiguous block of changed text in a file is called a hunk. Multiple hunks across files can be recorded together as a patch, which represents a delta (or set of hunks) of one or more files. A patch is essentially Darcs' term for what other VCSs call a commit. But patches in Darcs work quite differently than the standard commits we are used to.

In Git, each commit references one or more parent commits to form a continuous chain of development history. Darcs patches can be thought of in more abstract terms - it is not a requirement for a Darcs patch to have a parent. Therefore, patches can be thought of as standalone units, or sequences of units, that can be easily reordered, undone (even without being last in the history), cherry-picked, and merged.
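Reordering patches rests on commuting them: swapping two adjacent patches while adjusting them so the end result is unchanged. Here is a simplified Python sketch for two hunks in the same file. The Hunk class, apply_hunk and commute are hypothetical names, and the rule is deliberately conservative (hunks that touch or overlap are treated as dependent), so Darcs's actual commutation rules may differ in edge cases.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hunk:
    line: int    # 1-based line where the hunk applies
    old: tuple   # lines it removes
    new: tuple   # lines it adds


def apply_hunk(lines, h):
    """Replace h.old with h.new at h.line, checking the context matches."""
    i = h.line - 1
    if tuple(lines[i:i + len(h.old)]) != h.old:
        raise ValueError("hunk does not apply")
    return lines[:i] + list(h.new) + lines[i + len(h.old):]


def commute(a, b):
    """For hunks applied as a then b, return (b2, a2) with the same combined
    effect when applied as b2 then a2, or None if b depends on a."""
    if a.line + len(a.new) < b.line:
        # b sits below a's new text with at least one untouched line between:
        # undo a's net line-count change when moving b in front of it
        return Hunk(b.line - len(a.new) + len(a.old), b.old, b.new), a
    if b.line + len(b.old) < a.line:
        # b sits above a: b is unchanged, a shifts by b's net line-count change
        return b, Hunk(a.line + len(b.new) - len(b.old), a.old, a.new)
    return None  # touching or overlapping: treat as a dependency


base = ["a", "b", "c", "d", "e"]
A = Hunk(1, ("a",), ("A1", "A2"))  # replace line 1 with two lines
B = Hunk(5, ("d",), ())            # then delete "d", now on line 5
B2, A2 = commute(A, B)             # B2 deletes "d" at line 4 of the original
```

A hunk that edits a line A itself introduced, such as Hunk(2, ("A2",), ("X",)), makes commute return None, which is exactly the kind of dependency described next.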

However, a patch whose line changes border or overlap prior changes does need to record that it depends on the patch containing that prior change. In this sense, a chain of dependencies can, but is not required to, exist between patches. Note that this is only one example of patch dependencies; there are many other situations in which Darcs applies patch dependencies. This has a couple of noteworthy consequences:

- When undoing (removing) a patch on which other patches depend, all dependents must be removed as well.

- When merging a patch from one repository to another, all of that patch's dependencies must be brought in as well, assuming they don't already exist in the destination.

Both of these points are easier to digest once you consider that a patch (i.e. a representation of changes to lines in files) doesn't make sense if the parts of the files it changes aren't available for it to operate on. For example, it doesn't make sense to change a word in a line that was never added in the first place, so the patch that added the line must be present for the patch that changed the line to have meaning.
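Both consequences are graph reachability questions. Here is a minimal Python sketch, assuming patch dependencies are already known and stored as a mapping from each patch to the patches it directly depends on; the patch names and helper functions are hypothetical, not Darcs's internals.

```python
def reachable(start, edges):
    """Every node reachable from `start` by following `edges`, excluding `start`."""
    seen, stack = set(), [start]
    while stack:
        for nxt in edges.get(stack.pop(), ()):
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return seen


def dependents_map(depends_on):
    """Invert patch -> dependencies into patch -> patches that depend on it."""
    rev = {}
    for patch, deps in depends_on.items():
        for dep in deps:
            rev.setdefault(dep, set()).add(patch)
    return rev


def must_remove(patch, depends_on):
    """Undoing `patch` also removes everything that (transitively) depends on it."""
    return {patch} | reachable(patch, dependents_map(depends_on))


def must_pull(patch, depends_on, already_have):
    """Pulling `patch` brings along its missing (transitive) dependencies."""
    return ({patch} | reachable(patch, depends_on)) - already_have


# Hypothetical history: a line is added, then edited, then a typo in it is fixed.
DEPENDS_ON = {
    "add-line": set(),
    "edit-line": {"add-line"},
    "fix-typo": {"edit-line"},
    "unrelated": set(),
}
print(sorted(must_remove("add-line", DEPENDS_ON)))
print(sorted(must_pull("fix-typo", DEPENDS_ON, {"add-line"})))
```

Note that the unrelated patch is never dragged along in either direction, which is what lets Darcs cherry-pick and reorder independent patches freely.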

Unlike Git, Darcs does not support the creation of local branches. In Darcs, creating a new branch essentially amounts to cloning another copy of the repository. However, multiple local copies can be hard-linked to share subsets of repository files, which mitigates the redundancy of storing entire duplicate copies.

When a new Darcs repository is initialized in an empty directory, the following subdirectory tree structure is created:

_darcs/
    format
    hashed_inventory
    inventories/
    patches/
    prefs/
        binaries
        boring
        motd
    pristine.hashed/
    ...

The _darcs directory contains all the information Darcs uses to manage and track file revisions. We will highlight a few of the important items in this structure. The inventories/ folder is populated with inventory files that help Darcs piece together the history of the repository. The patches/ folder is populated with the patch files that are recorded, pulled, or merged into the repository. The prefs/binaries file contains a list of regular expressions that tell Darcs which files should be treated as binary. The prefs/boring file contains a list of regular expressions for files to ignore.

Another way that Darcs differentiates itself from other VCS tools is via its interactive command interface. Most VCS tools are pretty minimalist when it comes to interactivity - output messages are typically very brief and often cryptic. By default, Darcs commands provide verbose output to help speed up the learning curve for users and clarify what actions the users are taking. It is clear that the developers prioritized the user experience.

Darcs also makes it trivial to generate patch bundles that can be sent over email and applied by a remote user. This is useful because it removes the need for repository owners to grant push access to users.

Basic Commands

darcs init: Initialize a directory as a Darcs repository.

darcs add <filename.ext>: Add a new file to track revisions for.

darcs remove <filename.ext>: Remove a file from revision tracking.

darcs move <source> <dest>: Move or rename a tracked file.

darcs record: Record a new patch with changes in the working directory.

darcs whatsnew: Show diff between patches and working directory.

darcs get <darcs-url>: Download a copy of the Darcs repository at the specified URL.

darcs pull <darcs-url>: Retrieve changes (patches) from remote repository.

darcs push <darcs-url>: Transfer patches to remote repository.

darcs send: Generate a patch bundle to be sent over email.

darcs apply <patch>: Apply patches from an email patch bundle.

darcs log: List patches in repository. Can also use darcs changes.

For more information on Darcs internals, see the Darcs model and the Darcs user manual.

Sample Darcs Patch File

[Initial commit.
jacob@initialcommit.io**20200306230059
Ignore-this: a9a505fe1a60127fea644e8a9638b962
] addfile ./cheese.txt
hunk ./cheese.txt 1
+cheese.

There is a newer project called Pijul, heavily influenced by Darcs, which is currently under active development.

Monotone - Third Generation

Background

Monotone is a third-generation VCS that was created in 2002-2003 by Graydon Hoare, who later created the Rust programming language. Nathaniel Smith and Richard Levitte became Monotone's primary maintainers around 2006-2007. Monotone's latest stable release was in 2014. Its development seems to have slowed due to the rising popularity of other VCS, primarily Git. Monotone is written in C and C++.

Monotone is especially significant since it was the first VCS to use SHA1 hashes as identifiers to represent file/tree content, as well as a Directed Acyclic Graph (DAG) of commits chained together to form a traceable history. These concepts were devised by Jerome Fisher - see this email thread between the developers for some details. These ideas are central to many other VCS tools, including Git, which adopted them from Monotone. See this email from Linus Torvalds (especially the last paragraph) noting the influence and inspiration that Git derived from Monotone, and this post for more details.

Architecture

Monotone stores file deltas where possible instead of whole copies of each content version. The hashes are used as unique identifiers to organize and access content in a local repository. Unlike Git, which stores its objects directly as files on disk (compressed loose objects or pack/index files), Monotone's repository is an actual SQLite database stored on the local system. Monotone commands either interact purely with the files in the workspace (such as adding file changes) or transfer information between the workspace and the database (such as making or retrieving commits). Information in Monotone databases can be queried using the sqlite3 client.

Monotone represents trees using manifest files. A manifest file in Monotone is a human-readable text file that lists the paths and SHA1 content hashes of the files in a tree snapshot. Manifest files themselves are hashed to yield a unique identifier. When a set of changes is committed, Monotone creates a structure called a revision. A revision references the manifest of the resulting tree and the ID of the previous revision in the chain. In this way, a history of changes is built up as new revisions (commits) are made, from the initial commit onwards.
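Here is a minimal Python sketch of this content-addressed chain using hashlib. The text formats produced by manifest_text and revision_text are simplified stand-ins made up for illustration (only loosely modeled on the sample revision file later in this section), so the IDs will not match real Monotone IDs. The point is the dependency chain: file hashes feed the manifest ID, which feeds the revision ID together with the parent revision ID.

```python
import hashlib


def sha1_hex(data: bytes) -> str:
    """40-character hex SHA1 digest, the identifier style Monotone uses."""
    return hashlib.sha1(data).hexdigest()


def manifest_text(files: dict) -> str:
    """Simplified, made-up manifest format: one line per file, sorted by path."""
    return "".join(f'file "{path}" content [{sha1_hex(data)}]\n'
                   for path, data in sorted(files.items()))


def revision_text(parent_id: str, files: dict) -> str:
    """Simplified, made-up revision format: parent revision ID plus manifest ID."""
    manifest_id = sha1_hex(manifest_text(files).encode())
    return f"old_revision [{parent_id}]\nnew_manifest [{manifest_id}]\n"


def revision_id(parent_id: str, files: dict) -> str:
    return sha1_hex(revision_text(parent_id, files).encode())


r1 = revision_id("", {"cheese.txt": b"cheese.\n"})                  # initial commit
r2 = revision_id(r1, {"cheese.txt": b"cheese.\nmore cheese.\n"})    # child of r1
print(r1)
print(r2)
```

Because each revision ID covers its parent's ID, changing any file in any earlier revision would change every later revision ID, which is what makes the history tamper-evident.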

For clarity, it may be useful to compare some of Monotone's terminology to Git's:

| Git Term    | Monotone Equivalent |
| ----------- | ------------------- |
| Blob        | File                |
| Tree        | Manifest            |
| Commit      | Revision            |
| Working Dir | Workspace           |

Monotone databases are created independently from Monotone workspaces. The mtn db init --db=:<db-name> command creates and initializes an empty SQLite database that acts as the Monotone database. The :<db-name> format indicates that this will be a managed database, stored at $HOME/.monotone/databases/<db-name>.mtn by default. Managed databases allow Monotone to assume some default configurations (like the location of the database) for convenience.

The next step is to create a workspace using the command mtn --db=:<db-name> --branch=<branch-name> setup <project-name>. This will create a new workspace folder called <project-name> in the current directory and will link it to the specified database. It will also create a new branch with the specified name. It should be noted that branches can never be renamed in Monotone, and to preserve universal uniqueness, branches use a reverse-domain naming convention like com.domain.project-name.

Monotone creates the _MTN subdirectory as a part of the mtn setup command above in order to identify the directory as a Monotone workspace and to store configuration and revision information. The structure of the _MTN directory is as follows:

_MTN/
    format
    log
    options
    revision

This structure is somewhat simpler than other VCS tools we have seen, since the actual content is stored in a separate SQLite database file instead of directly in the _MTN folder. The _MTN/options file is used to store workspace configuration parameters, like the path to the database and the current branch name. After a new file in the workspace is added for tracking via the mtn add command, Monotone temporarily keeps track of this in the _MTN/revision file. Files, manifests, and revisions are compressed during the commit process and saved to the database. Here is the structure of some of the more important tables in the Monotone database schema:

CREATE TABLE files (
    id primary key,    -- strong hash of file contents
    data not null      -- compressed contents of a file
);

CREATE TABLE file_deltas (
    id not null,       -- strong hash of file contents
    base not null,     -- joins with files.id or file_deltas.id
    delta not null,    -- compressed rdiff to construct current from base
    unique(id, base)
);

CREATE TABLE revisions (
    id primary key,    -- SHA1(text of revision)
    data not null      -- compressed, encoded contents of a revision
);

CREATE TABLE revision_ancestry (
    parent not null,   -- joins with revisions.id
    child not null,    -- joins with revisions.id
    unique(parent, child)
);

CREATE TABLE manifests (
    id primary key,    -- strong hash of all the entries in a manifest
    data not null      -- compressed, encoded contents of a manifest
);

CREATE TABLE manifest_deltas (
    id not null,       -- strong hash of all the entries in a manifest
    base not null,     -- joins with either manifests.id or manifest_deltas.id
    delta not null,    -- rdiff to construct current from base
    unique(id, base)
);

There is a noteworthy difference between Monotone and Git. In Git, a branch is simply a reference (ref), or pointer, to a specific commit. Therefore, a push to a remote branch is normally accepted only if the remote branch's latest commit is already part of the history being pushed, so that the pointer can simply move forward. A common consequence of this is the need to pull down and merge the commits in a remote branch before pushing the branch back up. Git enforces this strict consistency so that a branch never ends up with two divergent versions after a pull/push operation.

However, in Monotone each commit contains an embedded branch label that identifies what branch it's on. Therefore, in general it is acceptable to have multiple heads of development since Monotone knows that they belong to the same branch. Monotone allows pushing commits to a remote without the requirement of a prior pull and merge. This does mean that the forks must be merged on occasion, dealing with any conflicts in the process. Monotone embodies eventual consistency in which branch consistency is not enforced every step of the way, but happens in the end.
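Because the history lives in ordinary SQLite tables, questions like "what are the heads?" become SQL queries. Here is a Python sketch using the standard sqlite3 module against an in-memory database that contains just the revisions and revision_ancestry tables from the schema above, filled with hypothetical revision IDs (r1 to r4) rather than real SHA1s. It treats every revision as being on one branch and defines a head as a revision that is no other revision's parent; real Monotone also consults branch certificates.

```python
import sqlite3


def open_demo_db():
    """In-memory stand-in for a Monotone database (two tables from the schema)."""
    db = sqlite3.connect(":memory:")
    db.executescript("""
        CREATE TABLE revisions (id primary key, data not null);
        CREATE TABLE revision_ancestry (
            parent not null, child not null, unique(parent, child));
    """)
    return db


def add_revision(db, rev_id, parents=()):
    db.execute("INSERT INTO revisions (id, data) VALUES (?, ?)", (rev_id, b""))
    db.executemany("INSERT INTO revision_ancestry (parent, child) VALUES (?, ?)",
                   [(p, rev_id) for p in parents])


def heads(db):
    """Revisions that no other revision lists as a parent."""
    rows = db.execute("""
        SELECT id FROM revisions
        WHERE id NOT IN (SELECT parent FROM revision_ancestry)
        ORDER BY id""")
    return [row[0] for row in rows]


def ancestors(db, rev_id):
    """All transitive parents of rev_id, via a recursive common table expression."""
    rows = db.execute("""
        WITH RECURSIVE anc(id) AS (
            SELECT parent FROM revision_ancestry WHERE child = ?
            UNION
            SELECT ra.parent FROM revision_ancestry ra JOIN anc ON ra.child = anc.id)
        SELECT id FROM anc ORDER BY id""", (rev_id,))
    return [row[0] for row in rows]


db = open_demo_db()
add_revision(db, "r1")
add_revision(db, "r2", ["r1"])    # one developer commits on top of r1
add_revision(db, "r3", ["r1"])    # another pushes without pulling first
print(heads(db))                  # two heads on the same branch
add_revision(db, "r4", ["r2", "r3"])  # a later merge joins them again
print(heads(db), ancestors(db, "r4"))
```

This mirrors the eventual-consistency model described above: the database happily stores two heads for a while, and a later merge revision with two parents brings the branch back to a single head.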

In order to synchronize revisions with remote repositories, public keys must be exchanged so that network transfers (pushes and pulls) can be performed. Repositories are typically made available on port 4691 using the mtn serve command and communicate via a custom protocol called netsync. The most common networking command in Monotone is sync, which synchronizes revisions in both directions; pushes and pulls are used less often. Pushes, pulls, and syncs strictly move data between local and remote databases, leaving the workspaces on both ends alone. A separate mtn update command is used to reflect changes from the local database into the workspace.

Monotone uses the concept of a certificate to associate information with revisions. This information can include a descriptive message, date, author, and more. Certificates also guarantee the integrity of revisions: each one carries the identifier of the RSA key that signed it (typically an email address) along with the signature itself. In Monotone, signing is mandatory, with no way to disable it.

As a final note, where other systems like CVS and Git typically use shell scripts for hooks, Monotone uses Lua.

Basic Commands

mtn db init --db=:<db-name>: Create a new, managed SQLite database in the ~/.monotone/databases/ directory.

mtn genkey <key-name>: Generate a public/private keypair for communication and signing.

mtn --db=:<db-name> --branch=<branch-name> setup <project-name>: Set up a new workspace and branch.

mtn add <filename.ext>: Add a new file for tracking.

mtn diff: Show differences between workspace and last revision.

mtn commit --message="message": Commit a new revision to the database.

mtn status: Show information related to the state of the working directory, such as whether files have been changed.

mtn log: Show the revision history for the active branch.

mtn serve: Make a Monotone repository available over a network via netsync.

mtn --db=:<db-name> push "mtn://<server-name>?<branch-name>*": Push revisions to remote database.

mtn --db=:<db-name> pull "mtn://<server-name>?<branch-name>*": Pull revisions from remote database.

mtn --db=:<db-name> sync "mtn://<server-name>?<branch-name>*": Sync revisions with a remote database in both directions.

mtn update: Update the workspace with new revisions from the database.

mtn propagate <source> <dest>: Perform a 3-way merge of the unique heads of the source and dest branches using their most recent common ancestor.

For more information on Monotone internals, see the Monotone docs.

Sample Monotone Revision File

format_version "1"

new_manifest [0000000000000000000000000000000000000002]

old_revision []

add_dir ""

add_file "cheese.txt"

content [1d0abddce2d0522824c169e8aa8979ffcd8a1094]

Bazaar - Third Generation

Background

Bazaar is an open-source, distributed VCS that is part of the GNU Project. Development and maintenance of Bazaar is sponsored by Canonical. Bazaar's predecessor Baz was originally released in 2005 before the project was taken on by Martin Pool. Bazaar is written primarily in Python. In 2017, Bazaar was forked into a project called Breezy, which added support for Python 3 and Git. More details about Breezy can be found on their website.

Architecture

Bazaar offers the flexibility of choosing either a distributed model similar to Git's, or a centralized model similar to SVN's. The distributed model offers a working directory and full local revision history which enables offline work and commits. The centralized option uses lightweight checkouts that create a local copy of the checked-out revision without the repository structure that tracks the history. Like SVN, this requires the user to be connected to a network in order to commit back to the central repository.

Like most of the VCS we have seen so far, Bazaar understands revisions as sets of changes applied to one or more files. In Bazaar, each revision is uniquely identified by a revision ID built from the committer's email address, the commit timestamp, and a random suffix, such as jacob@initialcommit.io-20200311215957-gtb2wnzcqr69c7u5. In addition, revisions are tagged with sequential revision numbers for human readability. Revision numbers on mainline branches are single sequential integers, like 1, 2, 3, and so on. Merged revisions have a three-part, dot-separated revision number like 1.2.3, where the first number is the mainline revision the change is derived from, the second identifies the merged branch, and the third is the revision's sequence number within that branch.
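The two identifier formats described above can be pulled apart with a few lines of Python. This is a sketch based only on the examples in this article: it assumes revision IDs always end in a 14-digit timestamp and a suffix, neither containing hyphens, and that revision numbers have either one or three parts. The function names are hypothetical and not part of Bazaar's API, and the timestamp is parsed without any timezone information.

```python
from datetime import datetime


def parse_revision_id(rev_id):
    """Split 'email-YYYYMMDDHHMMSS-suffix'; rsplit keeps hyphens inside the email."""
    email, stamp, suffix = rev_id.rsplit("-", 2)
    return email, datetime.strptime(stamp, "%Y%m%d%H%M%S"), suffix


def parse_revno(revno):
    """'5' -> mainline revision; '1.2.3' -> base mainline revision, branch, sequence."""
    parts = tuple(int(p) for p in revno.split("."))
    if len(parts) == 1:
        return {"mainline": parts[0]}
    if len(parts) == 3:
        base, branch, seq = parts
        return {"base": base, "branch": branch, "seq": seq}
    raise ValueError(f"unexpected revision number: {revno!r}")


print(parse_revision_id("jacob@initialcommit.io-20200311215957-gtb2wnzcqr69c7u5"))
print(parse_revno("3"), parse_revno("1.2.3"))
```

The revision ID is what guarantees global uniqueness across clones; the revision number is only a convenient, branch-local label that can differ between repositories.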

Default global configuration options are stored in the ~/.bazaar/bazaar.conf file. Command aliases can be defined in this file as shortcuts for commonly used, long commands. Bazaar commands are written using the format bzr [global-options] command [options and arguments]. The .bzrignore file is used to ignore files that don't need to be tracked.

When a new Bazaar repository is initialized in an empty directory, the following subdirectory tree structure is created:

.bzr/
    README
    branch-format
    branch-lock
    checkout/
        conflicts
        dirstate
        format
        lock/
        shelf/
        views
    branch/
        branch.conf
        format
        last-revision
        lock/
        tags
    repository/
        format
        indices/
        lock/
        obsolete_packs/
        pack-names
        packs/
        upload/

The .bzr/checkout/dirstate file contains information about the state of tracked files in the working directory. The .bzr/checkout/conflicts file contains information about conflicts that need to be resolved in the working directory. The .bzr/branch/last-revision file indicates the revision ID of the tip of the current branch. When changes are committed to the repository, Bazaar compresses them into the .pack file format and stores them in the .bzr/repository/packs directory. The pack files are organized via indices and names referenced in the .bzr/repository/indices/ folder and the .bzr/repository/pack-names file. Initially, each commit writes its own pack file, but the packs are periodically recombined to prevent problems caused by large numbers of pack files.
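The paragraph above does not say how many packs are kept after recombining. As an illustration only, here is a Python sketch of one plausible digit-based policy: the pack sizes follow the decimal digits of the total revision count, so 123 revisions would be held in packs of 100, 10, 10, 1, 1 and 1 revisions, and the number of packs never exceeds the sum of the count's digits. The function names and the policy itself are our assumptions for illustration; we have not verified that Bazaar implements exactly this rule.

```python
def pack_distribution(total_revisions):
    """Pack sizes under the assumed digit-based policy, largest first."""
    if total_revisions == 0:
        return []
    sizes = []
    for exponent, digit in enumerate(reversed(str(total_revisions))):
        sizes.extend([10 ** exponent] * int(digit))  # one pack per unit of this digit
    return sorted(sizes, reverse=True)


def needs_repack(pack_sizes):
    """True when there are more packs than the assumed policy allows."""
    return len(pack_sizes) > len(pack_distribution(sum(pack_sizes)))


# Twelve commits, each still in its own single-revision pack:
print(needs_repack([1] * 12), pack_distribution(12))
```

A policy like this keeps the pack count logarithmic in the size of the history while only ever rewriting the small, recent packs, so most commits trigger no recombination at all.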

In Bazaar, pulling a branch from a remote to a local repository can only be performed if the branches haven't diverged. If they have diverged, the bzr merge <url> command must be used instead. The bzr push <bzr+ssh://server/directory> command can be used to deploy a copy of a branch working directory to a specified location.

An interesting feature of Bazaar is the Patch Queue Manager (PQM). PQM is a separate program that runs as a service on a server and waits for emails to come in. These emails must be GnuPG-signed and usually contain patches that are intended to be merged into public branches. The command PQM should run can be customized via the subject line of the email.

Basic Commands

bzr whoami "<name> <email>": Set your name and email that will be stored with revisions.

bzr init: Initialize the current directory as a Bazaar branch (creates the hidden .bzr folder and its contents).

bzr add <filename.ext>: Add a file for revision tracking.

bzr status: Show information related to the state of the working directory, such as which files have been changed.

bzr diff: Show changes between current working directory and last revision.

bzr commit -m 'Commit message': Commit a set of changed files and folders along with a descriptive commit message.

bzr revert: Remove working directory changes made since the head revision.

bzr uncommit: Remove the head revision.

bzr branch <existing-branch> <new-branch>: Create a new local copy of an existing branch.

bzr pull: Bring new commits from a remote repository into the local repository.

bzr merge <url>: Merge commits from a branch with diverged history.

bzr push <bzr+ssh://server/directory>: Deploy a copy of a branch to a specified location.

bzr info: Show information about the current branch.

bzr log: Show the commit history and associated descriptive messages for the active branch.

For more information on Bazaar internals, see the Bazaar docs.

Sample Bazaar Pack File

The contents of pack files are compressed and I wasn't able to generate a clear-text version. If you know how to do this, please drop me a line!

Fossil - Third Generation

Background

Fossil was created by Dwayne Richard Hipp (also the creator of the SQLite RDBMS) and released in 2006. It is written in C and SQL. Fossil is unique in that it provides not only a fully functional distributed VCS, but also a suite of tools that allow developers to easily host a web portal to collaborate and share information about their projects. These tools include integrated bug tracking, a wiki, a forum, tech notes, and even a built-in web interface. With all of these web-oriented features, Fossil is clearly designed with efficient collaboration in mind for both technical and non-technical users.

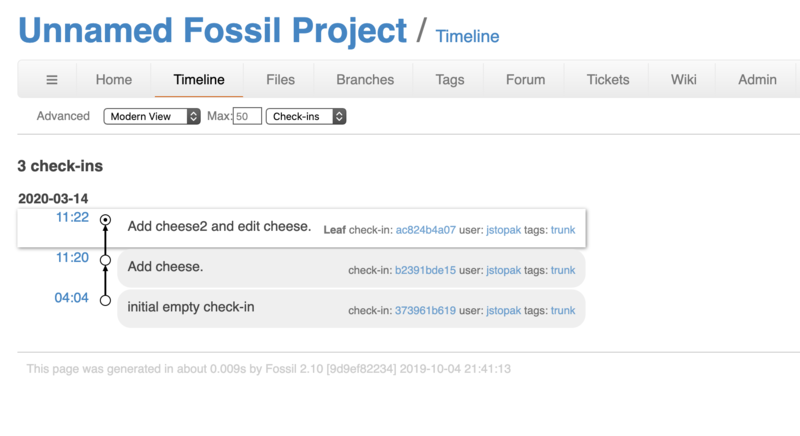

Here is a screenshot of the web portal that can be spun up in a single command:

Figure 1: Fossil Web Portal

As you can see in the image above, the Fossil web portal has built-in pages for displaying the repository timeline, committed files, branches, and tags, as well as a forum where users can create posts, a ticketing system, and a wiki. Fossil's own website and source code are actually self-hosted on a Fossil web portal. It's worth checking out!

Architecture

Like Monotone, Fossil uses a SQLite database to store its repository. One or more projects can be tracked in a single SQLite file. Fossil working directories are identified by a hidden SQLite database file called .fslckout (FoSsiL ChecKOUT) in the root of the working directory. This file stores configuration information, including a path reference to its corresponding SQLite repository. Here is an example of the table structure of the .fslckout file:

CREATE TABLE vvar(
  name TEXT PRIMARY KEY NOT NULL,
  value CLOB,
  CHECK( typeof(name)='text' AND length(name)>=1 )
);
CREATE TABLE vfile(
  id INTEGER PRIMARY KEY,
  vid INTEGER REFERENCES blob,
  chnged INT DEFAULT 0,
  deleted BOOLEAN DEFAULT 0,
  isexe BOOLEAN,
  islink BOOLEAN,
  rid INTEGER,
  mrid INTEGER,
  mtime INTEGER,
  pathname TEXT,
  origname TEXT,
  mhash TEXT,
  UNIQUE(pathname,vid)
);
CREATE TABLE vmerge(
  id INTEGER REFERENCES vfile,
  merge INTEGER,
  mhash TEXT
);
CREATE UNIQUE INDEX vmergex1 ON vmerge(id,mhash);
CREATE TRIGGER vmerge_ck1 AFTER INSERT ON vmerge
WHEN new.mhash IS NULL BEGIN
  SELECT raise(FAIL,
  'trying to update a newer checkout with an older version of Fossil');
END;
CREATE TABLE sqlite_stat1(tbl,idx,stat);

Another similarity between Fossil and Monotone is that developers can push, pull, and sync (move changes in both directions) between local and remote repositories. These transfers happen over HTTP using the repository URLs, and they operate purely between repositories, so changes are not immediately reflected in the working directories. Because Fossil transfers data over HTTP by default, web browsers can also access Fossil repositories directly via their URLs.
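Because .fslckout is an ordinary SQLite database, its tables can be queried directly. The Python sketch below builds a trimmed-down, in-memory copy of the vvar and vfile tables shown above and asks which tracked files are flagged as changed or deleted. This is an illustration under assumptions, not Fossil's own logic: the `repository` key and its path, the helper functions, and the choice of "non-zero chnged means modified" are all hypothetical simplifications.

```python
import sqlite3

# Trimmed copy of the .fslckout tables above; columns not used here are omitted.
SCHEMA = """
CREATE TABLE vvar(
  name TEXT PRIMARY KEY NOT NULL,
  value CLOB,
  CHECK( typeof(name)='text' AND length(name)>=1 )
);
CREATE TABLE vfile(
  id INTEGER PRIMARY KEY,
  vid INTEGER,
  chnged INT DEFAULT 0,
  deleted BOOLEAN DEFAULT 0,
  rid INTEGER,
  pathname TEXT,
  UNIQUE(pathname,vid)
);
"""


def build_checkout() -> sqlite3.Connection:
    """Create an in-memory stand-in for a .fslckout file with two tracked files."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    # Hypothetical key/value: a pointer to the repository database file.
    conn.execute("INSERT INTO vvar(name, value) VALUES ('repository', '/home/user/cheese.fossil')")
    conn.executemany(
        "INSERT INTO vfile(vid, chnged, deleted, rid, pathname) VALUES (?, ?, ?, ?, ?)",
        [(6, 1, 0, 4, "cheese.txt"),    # pretend this file was edited
         (6, 0, 0, 5, "cheese2.txt")],  # unchanged since checkout
    )
    return conn


def pending_changes(conn: sqlite3.Connection) -> list[str]:
    """Paths flagged as changed or deleted in the checkout."""
    rows = conn.execute(
        "SELECT pathname FROM vfile WHERE chnged <> 0 OR deleted <> 0 ORDER BY pathname")
    return [path for (path,) in rows]


def repository_path(conn: sqlite3.Connection) -> str:
    """Look up the repository pointer stored in vvar."""
    (value,) = conn.execute("SELECT value FROM vvar WHERE name = 'repository'").fetchone()
    return value


if __name__ == "__main__":
    checkout = build_checkout()
    print(repository_path(checkout))
    print(pending_changes(checkout))
```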

In Fossil, each file version is referred to as an artifact and is uniquely identified by an artifact ID. An artifact ID is a SHA1 hash (or, in more recent versions, a SHA3-256 hash) of that file's content, similar to Git. Each commit generates a manifest file, which maps file paths to their corresponding artifact IDs and also records the commit author and date. The manifest file itself is hashed to generate an artifact ID that acts as the commit ID.
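The chain "file content → artifact ID → manifest → commit ID" can be sketched in a few lines of Python. This is a simplified model, not Fossil's code. It assumes the artifact ID is the SHA3-256 of the raw bytes (which matches the 64-character IDs in the sample data in this section). It follows the card letters of the sample manifest in this section (C, D, F, P, U, Z) but omits the R card, and its escaping handles only backslash, space, and newline, whereas real Fossil escapes more characters. The function names are hypothetical.

```python
import hashlib
from typing import Optional


def artifact_id(content: bytes) -> str:
    """Hash raw bytes with SHA3-256, giving a 64-character hex artifact ID."""
    return hashlib.sha3_256(content).hexdigest()


def escape(text: str) -> str:
    """Simplified card escaping: only backslash, space, and newline are handled."""
    return text.replace("\\", "\\\\").replace(" ", "\\s").replace("\n", "\\n")


def build_manifest(comment: str, date: str, files: dict[str, bytes],
                   parent: Optional[str], user: str) -> str:
    """Build a simplified manifest with cards in alphabetical order (no R card)."""
    lines = [f"C {escape(comment)}", f"D {date}"]
    for path in sorted(files):
        # One F card per file: the path plus the artifact ID of its content.
        lines.append(f"F {escape(path)} {artifact_id(files[path])}")
    if parent is not None:
        lines.append(f"P {parent}")
    lines.append(f"U {escape(user)}")
    body = "\n".join(lines) + "\n"
    # Z card: MD5 checksum of all the manifest text that precedes it.
    return body + f"Z {hashlib.md5(body.encode()).hexdigest()}\n"


if __name__ == "__main__":
    files = {"cheese.txt": b"cheddar\n", "cheese2.txt": b"brie\n"}  # made-up contents
    manifest = build_manifest("Add cheese2 and edit cheese.",
                              "2020-03-14T11:22:17.519", files, None, "jstopak")
    print(manifest)
    print("commit ID:", artifact_id(manifest.encode()))
```

Because the commit ID is a hash of the manifest, and the manifest contains the hash of every file, changing a single byte in any file produces a different commit ID.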

Here is the structure of some of the more notable tables in the Fossil repository schema:

CREATE TABLE blob(
  rid INTEGER PRIMARY KEY,
  rcvid INTEGER,
  size INTEGER,
  uuid TEXT UNIQUE NOT NULL,
  content BLOB,
  CHECK( length(uuid)>=40 AND rid>0 )
);
CREATE TABLE delta(
  rid INTEGER PRIMARY KEY,
  srcid INTEGER NOT NULL REFERENCES blob
);
CREATE TABLE user(
  uid INTEGER PRIMARY KEY,
  login TEXT UNIQUE,
  pw TEXT,
  cap TEXT,
  cookie TEXT,
  ipaddr TEXT,
  cexpire DATETIME,
  info TEXT,
  mtime DATE,
  photo BLOB
);
CREATE TABLE filename(
  fnid INTEGER PRIMARY KEY,
  name TEXT UNIQUE
);
CREATE TABLE tag(
  tagid INTEGER PRIMARY KEY,
  tagname TEXT UNIQUE
);

By default, Fossil operates in autosync mode, which provides a centralized workflow that minimizes the effort required by developers. In autosync mode, commits are automatically pushed to a central server after they are committed locally. Before a commit is made, Fossil checks the central repository for new commits and automatically pulls them into the local database. As with any centralized workflow, consistent network access is required for autosync mode to work effectively. If autosync is disabled, Fossil operates in manual-merge mode, which is preferable for distributed workflows where network access is not guaranteed at commit time. In this mode, syncs, pushes, pulls, and merges must be executed manually.
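The difference between autosync and manual-merge mode comes down to when pull and push happen relative to a commit. The Python sketch below models each repository as nothing more than a set of commit IDs. This is a deliberate simplification that ignores networking, forks, and merges, and the `Repo` class and helper functions are hypothetical rather than part of Fossil.

```python
from dataclasses import dataclass, field


@dataclass
class Repo:
    """A repository modeled as nothing more than a set of commit IDs."""
    name: str
    commits: set[str] = field(default_factory=set)


def pull(local: Repo, remote: Repo) -> None:
    """Copy the remote's commits into the local repository."""
    local.commits |= remote.commits


def push(local: Repo, remote: Repo) -> None:
    """Copy the local repository's commits to the remote."""
    remote.commits |= local.commits


def sync(local: Repo, remote: Repo) -> None:
    """Move changes in both directions."""
    pull(local, remote)
    push(local, remote)


def commit(local: Repo, central: Repo, commit_id: str, autosync: bool = True) -> None:
    if autosync:
        pull(local, central)  # fetch other developers' work before committing
    local.commits.add(commit_id)
    if autosync:
        push(local, central)  # publish the new commit immediately


if __name__ == "__main__":
    central, alice, bob = Repo("central"), Repo("alice"), Repo("bob")
    commit(alice, central, "a1")
    commit(bob, central, "b1")                   # Bob pulls a1 before committing
    commit(alice, central, "a2", autosync=False) # stays local until pushed
    print(sorted(central.commits), sorted(alice.commits), sorted(bob.commits))
```

With autosync on, Bob's commit automatically picks up Alice's earlier work and publishes his own. With autosync off, Alice's commit stays local until she runs a push or sync herself.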

Fossil's web interface has several pages for admin users to enter raw TH1 scripts, SQL scripts, and HTML to customize the ticketing process. TH1 is a lightweight scripting language used by Fossil for processing content for the web interface. See Fossil's page on TH1 for more details. TH1 scripts can also be used to implement hooks before or after certain operations are executed.

Fossil can also store unversioned content: files that are kept in the repository and can be synced, but that have no revision history, so only the newest version of each is retained.

Basic Commands

fossil init <db-filename>: Create an empty SQLite database shell to act as a new repository.

fossil clone <url> <db-filename>: Create a local copy of the Fossil repository at the specified URL.

fossil open <db-filename>: Check out a working copy from the local repository.

fossil add <filename.ext>: Add a new file for revision tracking.

fossil diff <filename.ext>: Show changes between working directory files and the checked-out commit.

fossil commit: Commit a set of changed files and folders to the local repository.

fossil status: Show information related to the state of the working directory, untracked files, modified files, etc.

fossil branch ls: List the branches in the repository.

fossil branch new <new-branch> <source-branch>: Create a new branch based on a source branch.

fossil commit --branch <branch>: Commit changes to a new branch.

fossil update <revision>: Check out the specified revision or branch into the working directory.

fossil merge <branch>: Merge the specified branch into the current branch checked out in the working directory.

fossil undo: Undo the most recent update, merge, revert, or similar operation.

fossil redo: Undo the most recent undo.

fossil pull <url>: Download new revisions from a remote repository without merging them into the working directory.

fossil push <url>: Transfer new revisions to remote repository.

fossil sync <url>: Sync revisions with a remote database in both directions.

fossil timeline: Show the commit history and associated descriptive messages for the active branch.

fossil ui: Serve the Fossil repository over local HTTP and open it in a web browser.

fossil serve: Serve the Fossil repository over HTTP without automatically opening a web browser.

Sample Fossil Database Table Entries

Working directory database .fslckout data in vfile table:

id  vid  chnged  deleted  isexe  islink  rid  mrid  mtime       pathname     origname  mhash
--  ---  ------  -------  -----  ------  ---  ----  ----------  -----------  --------  -----
1   6    0       0        0      0       4    4     1584184863  cheese.txt
2   6    0       0        0      0       5    5     1584184877  cheese2.txt

Repository database data in artifact table:

rid  rcvid  size  atype  srcid  hash                                                              content
---  -----  ----  -----  -----  ----------------------------------------------------------------  -------
1    1      166   1             373961b619d50de7dee4c15df6966141c10686c688e099fcc2dd7bf198408d10
2    2      8     1             7b59a35cf3e05904f5392f97a8d7a1aa45c620fe9f2723d4710765e2ed68f26c
3    2      266   1             b2391bde15143b5d0726b8853b43e37fe6440d49065ff484ecb3f05adba880b9
4    3      21    1             7ec259c8f64949ed8ac5b9716052d1ed17f8e8f2cde8f6e4eab5b2a38868e367
5    3      9     1             1484b7dfbba4e9b30ef1df94f75e15db4098cf1a8dc95c1bc4c2f8c438d9acee
6    3      365   1             ac824b4a07648728f1c3235ac0244d0503618fd70a1a98c4428453cac1b9f4e9

Sample manifest file content:

C Add\scheese2\sand\sedit\scheese.
D 2020-03-14T11:22:17.519
F cheese.txt 7ec259c8f64949ed8ac5b9716052d1ed17f8e8f2cde8f6e4eab5b2a38868e367
F cheese2.txt 1484b7dfbba4e9b30ef1df94f75e15db4098cf1a8dc95c1bc4c2f8c438d9acee
P b2391bde15143b5d0726b8853b43e37fe6440d49065ff484ecb3f05adba880b9
R c5192d70793cc498f4eca52190401de8
U jstopak
Z 432b9e3b81970134705ea607cf616e71

For more information on Fossil internals, check out the Fossil docs.

Summary

In this article, we provided a technical comparison of some historically relevant version control systems. If you have any questions or comments, feel free to reach out to jacob@initialcommit.io.

If you're interested in learning more about how Git's code works, check out our Baby Git Guidebook for Developers.

If you're interested in learning the basics of coding and software development, check out our Coding Essentials Guidebook for Developers.

A special thanks to Reddit user u/Teknikal_Domain, who provided expert details and insight that greatly contributed to the writing of this article.
